Let's Do a Coding Challenge

So for the last little bit I’ve been jobless. I wrote a whole post about it but haven’t posted it yet, because after writing it I figured it was better to let it sit as one of those “write a letter but don’t send it until after you’ve had a good night’s sleep” kind of things. And so I wrote it, left it, and… yeah. There’s nothing particularly inflammatory or anything – at least, not as far as I can tell. However, I will be pulling out some things and writing posts on specific topics later.

Anyways!

Something I thought might be fun is to take some of the coding challenges I’ve gotten in the last few weeks and walk through the process of solving them. For shorter ones, I might also look into alternate solutions or ways to further optimize the solution I find.

As for what language I’ll be using for these, I’m going to be doing this using Go 1.19.

First Challenge: Pig Latin Encoder #

So here’s the first problem I’ll be tackling: I’m going to write some code that takes a string and “translates” each word into Pig Latin. I say “translates”, because what we’re really doing is using a cipher to encode some text, and I’ll be using “cipher” and “encode” from here on out. Mostly because that way I have a term for the thing we’re building ( ‘cipher’ ), and a different word for the operation it performs ( ’encode’ ).

To do that, I’ll be following these rules:

- If the word begins with a consonant, take the first letter of the word, move it to the end, and then add “ay”

- If the word begins with a vowel, just add “way” to the end of the word

Capitalization should respect the word, so “Hello world” should become “Ellohay orldway”, and same for punctuation.

Just so you’re all clear, here’s a few examples of what the encoding process should produce:

| English | Pig Latin |
|---|---|
| hello | ellohay |
| hello world | ellohay orldway |
| eat apples | eatway applesway |
| Hello, world! | Ellohay, orldway! |

So to sum up, here’s the “challenge”:

Write a Go package that can encode English text using the Pig Latin cipher.

However, I don’t want to stop there – so I’m adding the following bits to the challenge:

Write a command-line tool that uses the library to encode the provided text, whether the text is in a file or provided as arguments.

Write a web utility that can encode text sent to it.

Add ROT13 as a cipher.

Add the ability to decode text that has been encoded.

Sounds good? Good! Let’s dive in.

Also, quick note: I didn’t nail down that I’d be using “cipher” and “encode” instead of “translation” until I got a ways into writing this. Naming is hard, and I didn’t want to spend too much time thinking about naming until my subconscious figured it out for me. So if you see anything in here still saying “translation” or anything like that instead of using “cipher”, “encode”, or “decode” – make the appropriate substitution in your head.

Setting Up #

So, like any disciplined programmer, I’m going to do this TDD style. But where do I start, and where am I trying to get to?

So the first thing I need to figure out is folder structure. However, I only need to start with one folder: the one for the library. This is where the basic shared stuff will live – stuff that’s used by more than one cipher. I don’t need a folder for the web or command line tools yet, because I’m not touching those parts yet.

So I’m going to create the directory where I’ll be spending most of my time at first: a library folder. However, I’m not going to call it library or lib. Following some of Dave Cheney’s wisdom, I’m going to give this folder a name that reflects the package I’ll be creating and what it does. I’m not going to use pig-latin because package names can’t have a dash in them ( and also we want to have more ciphers later on ); instead I’m going to call it cipher.

Why A Library #

Really quick digression on why I’m designing this as a library, rather than just wrapping it up entirely in the command line tool or an HTTP-based API.

Well, there’s two reasons for this. The first is that I want to build multiple “front-ends” for the library. I’ve already mentioned these front-ends: the command line tool and the HTTP API.

The other reason is that even if I was writing this for a work thing where only either a command-line tool OR an HTTP API was needed, I’d still write this library first in the manner you’ll see below. That’s because A) I don’t know if it’ll need more front-ends later, and B) writing code this way makes it easier to read and maintain later.

So to write this in a way that doesn’t have code snippets being copied and pasted all over the place1, I’m putting the core functionality into a library. This library will provide an API2 that can be used within another application; I’ll be showing how that works when I write the command-line tool, and later the HTTP API.

If I do this right, I should be able to write this library to do Pig Latin, write our command line & web tools, and get them working – then once that’s all good, add ROT13 without having to change anything ( or much of anything ).
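To make that goal a bit more concrete, here is a minimal sketch of the idea. It is not the code from the repo: the `Encoder` interface, the toy `rot13` type, and the `run` helper are hypothetical names I'm using for illustration. The point is only that a front-end written against a small interface shouldn't need to change when a second cipher shows up.

```go
package main

import (
	"fmt"
	"strings"
)

// Encoder is a hypothetical interface that a front-end could depend on.
type Encoder interface {
	Encode(input string) (string, error)
}

// rot13 is a toy cipher, used here only to show a second implementation.
type rot13 struct{}

// Encode rotates ASCII letters by 13 places and leaves everything else alone.
func (rot13) Encode(input string) (string, error) {
	rotate := func(r rune) rune {
		switch {
		case r >= 'a' && r <= 'z':
			return 'a' + (r-'a'+13)%26
		case r >= 'A' && r <= 'Z':
			return 'A' + (r-'A'+13)%26
		}
		return r
	}
	return strings.Map(rotate, input), nil
}

// run stands in for a front-end: it only knows about the interface.
func run(e Encoder, text string) string {
	out, err := e.Encode(text)
	if err != nil {
		return "error: " + err.Error()
	}
	return out
}

func main() {
	fmt.Println(run(rot13{}, "Hello, world!"))
}
```

Anything that satisfies `Encoder` can be handed to `run`, so a Pig Latin type with the same method would slot in the same way.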

First Up: Tests! #

So the first thing I need is some tests. I’m doing this TDD style, after all.

Fun thing about my editor: if I open up a file that’s clearly a Go test file, it fills it in with a basic test. This one, in fact:

```go
func TestPigLatin_Basics(t *testing.T) {
	tests := []struct {
		a, b, c int
	}{
		{1, 0, 1},
		{1, 1, 2},
	}

	for i, x := range tests {
		tt := x
		t.Run(fmt.Sprintf("test%v", i), func(t *testing.T) {
			c := tt.a + tt.b
			if c != tt.c {
				t.Errorf("something is seriously wrong, %v+%v != %v, got %v instead", tt.a, tt.b, tt.c, c)
			}
		})
	}
}
```

Which is actually handy for me, because I can ensure that my brand-new install of Go 1.19 is working:

$ go test -v ./...

? github.com/seanhagen/pig-latinizer [no test files]

? github.com/seanhagen/pig-latinizer/cmd [no test files]

=== RUN TestPigLatin_Basics

=== RUN TestPigLatin_Basics/test0

=== RUN TestPigLatin_Basics/test1

--- PASS: TestPigLatin_Basics (0.00s)

--- PASS: TestPigLatin_Basics/test0 (0.00s)

--- PASS: TestPigLatin_Basics/test1 (0.00s)

PASS

ok github.com/seanhagen/cipherator/cipher (cached)

Neat!

Now to immediately delete all that auto-generated code.

My first step is to get to a “proper” failing test. Easiest way to do that is to call a function that doesn’t exist and expect a type that also doesn’t exist. That looks like this:

```go
func TestPigLatin_Basics(t *testing.T) {
	var tr *PigLatin
	var err error

	tr, err = NewPigLatin()
	assert.NotNil(t, tr)
	assert.NoError(t, err)
}
```

I’m using the wonderful stretchr/testify/assert package here, because it helps keep tests nice and readable. So what do I get when I run go test -v ./... again?

? github.com/seanhagen/cipherator [no test files]

? github.com/seanhagen/cipherator/cmd [no test files]

# github.com/seanhagen/cipherator/cipher [github.com/seanhagen/cipherator/cipher.test]

cipher/piglatin_test.go:10:10: undefined: PigLatin

cipher/piglatin_test.go:13:12: undefined: NewPigLatin

FAIL github.com/seanhagen/cipherator/cipher [build failed]

FAIL

Huzzah! Failure!

This might seem silly, but I’ve actually done something important: I’ve verified our tests run properly. That previous run of go test showed me that I can get a passing test, but that could have been a false positive. Writing this new test shows me that I can get a failing test. It also helps me confirm the Go compiler is working properly; if it somehow compiled this and ran it I’d have bigger problems to sort out. That said, the only time I run that first test is usually after I install a new version of Go 😅.

Moving forward I’m probably not going to write many more super simple tests like this. No promises though.

Next up, I’m going to write another test that will test the two main pieces of our encoder: encoding a word that begins with a consonant, and one that begins with a vowel.

```go
func TestPigLatin_Words(t *testing.T) {
	tests := []struct {
		input, output string
	}{
		{"hello", "ellohay"},
		{"eat", "eatway"},
	}

	for _, tt := range tests {
		t.Run(fmt.Sprintf("%v to %v", tt.input, tt.output), func(t *testing.T) {
			pl, err := NewPigLatin()
			assert.NotNil(t, pl)
			assert.NoError(t, err)

			got, err := pl.Encode(tt.input)
			assert.Equal(t, tt.output, got)
		})
	}
}
```

This of course fails:

# github.com/seanhagen/cipherator/cipher [github.com/seanhagen/cipherator/cipher.test]

cipher/piglatin_test.go:33:19: pl.Encode undefined (type *PigLatin has no field or method Encode)

FAIL github.com/seanhagen/cipherator/cipher [build failed]

Which makes sense, I don’t have an encode method. So I’ll write some code so I do!

```go
func (pl PigLatin) Encode(input string) (string, error) {

}
```

But… what do I put in the function? Well, in TDD I’m supposed to write as little code as required to get the test to pass. So here’s what I’ll put in:

```go
func (pl PigLatin) Encode(input string) (string, error) {
	if input == "hello" {
		return "ellohay", nil
	}

	if input == "eat" {
		return "eatway", nil
	}

	return "", fmt.Errorf("don't know how to encode '%v' yet", input)
}
```

And hey, what do you know, it works:

=== RUN TestPigLatin_Basics

--- PASS: TestPigLatin_Basics (0.00s)

=== RUN TestPigLatin_Words

=== RUN TestPigLatin_Words/hello_to_ellohay

=== RUN TestPigLatin_Words/eat_to_eatway

--- PASS: TestPigLatin_Words (0.00s)

--- PASS: TestPigLatin_Words/hello_to_ellohay (0.00s)

--- PASS: TestPigLatin_Words/eat_to_eatway (0.00s)

PASS

ok github.com/seanhagen/cipherator/cipher 0.003s

Real Code Hours #

However, this isn’t really the solution, right? I can’t hard-code the correct output for each possible input. Kind of goes against the whole reason to program an algorithm, right? Well, now I can add another test case or two, and then use that as my excuse to write the “proper” code. So let me add world and apples as two more cases to my test table:

```go
func TestPigLatin_Words(t *testing.T) {
	tests := []struct {
		input, output string
	}{
		{"hello", "ellohay"},
		{"eat", "eatway"},
		{"world", "orldway"},
		{"apples", "applesway"},
	}

	for _, tt := range tests {
		t.Run(fmt.Sprintf("%v to %v", tt.input, tt.output), func(t *testing.T) {
			pl, err := NewPigLatin()
			assert.NotNil(t, pl)
			assert.NoError(t, err)

			got, err := pl.Encode(tt.input)
			assert.Equal(t, tt.output, got)
		})
	}
}
```

This should fail, and does:

=== RUN TestPigLatin_Basics

--- PASS: TestPigLatin_Basics (0.00s)

=== RUN TestPigLatin_Words

=== RUN TestPigLatin_Words/hello_to_ellohay

=== RUN TestPigLatin_Words/eat_to_eatway

=== RUN TestPigLatin_Words/world_to_orldway

piglatin_test.go:36:

Error Trace: cipherator/cipher/piglatin_test.go:36

Error: Not equal:

expected: "orldway"

actual : ""

Diff:

--- Expected

+++ Actual

@@ -1 +1 @@

-orldway

+

Test: TestPigLatin_Words/world_to_orldway

=== RUN TestPigLatin_Words/apples_to_applesway

piglatin_test.go:36:

Error Trace: cipherator/cipher/piglatin_test.go:36

Error: Not equal:

expected: "applesway"

actual : ""

Diff:

--- Expected

+++ Actual

@@ -1 +1 @@

-applesway

+

Test: TestPigLatin_Words/apples_to_applesway

--- FAIL: TestPigLatin_Words (0.00s)

--- PASS: TestPigLatin_Words/hello_to_ellohay (0.00s)

--- PASS: TestPigLatin_Words/eat_to_eatway (0.00s)

--- FAIL: TestPigLatin_Words/world_to_orldway (0.00s)

--- FAIL: TestPigLatin_Words/apples_to_applesway (0.00s)

FAIL

FAIL github.com/seanhagen/cipherator/cipher 0.003s

FAIL

Now it’s time to write some real code to solve this problem. So the first step is to remove our “working” code from before, leaving us with pretty much an empty function:

```go
func (pl PigLatin) Encode(input string) (string, error) {
	return "", fmt.Errorf("don't know how to encode '%v' yet", input)
}
```

Wait, How DO I Do This? #

So let’s take a quick break from code and think about how we can solve this problem. There are a few different ways I can do this, but let’s start with a simple version.

How about this: we start with three slices3:

- one called data, that holds the input exploded into single UTF-8 characters
- another called output, that will hold the output that will be returned
- a last one called currentWord, that holds the word currently being encoded

```go
func (pl PigLatin) Encode(input string) (string, error) {
	var output, currentWord []string
	data := strings.Split(input, "")

	// code goes here

	return "", fmt.Errorf("don't know how to encode '%v' yet", input)
}
```

This isn’t working yet, because I’ve defined three variables but haven’t used them yet. We’re getting to that, though.

Now that I’ve got these three slices, what do I do with them? Well, how about looping through data, and putting each letter into currentWord. Do that until the code hits a non-letter character or reaches the end of data. When it hits a non-letter character or the end, take what’s in currentWord and “encode” it, then put the encoded data into output. At the end of the function, join output together into a single string and return it.

I’m going to need some helper functions though; I need to be able to identify if a character is a letter or not, and if it’s a space or not. So I put down the encoding stuff for a second, and write some more tests! If that sounds frustrating, think about it this way: writing tests is a way to force us to write the code we wanted to write next anyways – but in a way that ensures its testable! Handy, that.

Trying to write tests after the fact usually ends up with very odd tests that have to do way too much work to test the unit at hand, and are usually pretty fragile. By doing TDD we get test coverage (good) as well as easily testable code (very good).

```go
func TestPigLatin_IsLetter(t *testing.T) {
	tests := []struct {
		input  string
		expect bool
	}{
		{"a", true},
	}

	pl, err := NewPigLatin()
	assert.NotNil(t, pl)
	assert.NoError(t, err)

	for _, tt := range tests {
		t.Run(fmt.Sprintf("%v is letter %v", tt.input, tt.expect), func(t *testing.T) {
			got := pl.isLetter(tt.input)
			assert.Equal(t, tt.expect, got)
		})
	}
}
```

Of course, this fails at the moment:

# github.com/seanhagen/cipherator/cipher [github.com/seanhagen/cipherator/cipher.test]

cipher/piglatin_test.go:56:14: pl.isLetter undefined (type *PigLatin has no field or method isLetter)

FAIL github.com/seanhagen/cipherator/cipher [build failed]

FAIL

So let me get that passing. This one is pretty simple, not a lot to do here:

```go
// isLetter reports whether in sorts within the ASCII ranges a-z or A-Z.
func (pl PigLatin) isLetter(in string) bool {
	if in >= "a" && in <= "z" || in >= "A" && in <= "Z" {
		return true
	}
	return false
}
```

After that, I go through a very similar process to write a test for isSpace and the code to get the test to pass. Once that’s done, I can switch back to the encoder and keep moving forward. Going to skip the test & code steps here, and show you what I ended up with. There’s a bunch of code, but there are two important pieces to show you.

First up we’ve got the function that handles the encoding bit:

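```go
func (pl PigLatin) doTranslation(in []string) []string {
	if len(in) == 0 {
		return in
	}

	// Vowel-initial words keep their letters and capitalization as-is.
	// Lowercase before checking so "Apple" is treated like "apple".
	if pl.isVowel(strings.ToLower(in[0])) {
		return append(in, vowAppend...)
	}

	// Consonant-initial words: move the capital onto the new first letter.
	// The length check avoids indexing in[1] on a single-letter word.
	if pl.isUpper(in[0]) && len(in) > 1 {
		in[0] = strings.ToLower(in[0])
		in[1] = strings.ToUpper(in[1])
	}

	// Move the first letter to the end, then add the consonant suffix.
	in = append(in[1:], in[0])

	return append(in, conAppend...)
}
```

And the function that gets called by a user to encode a string: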

```go
func (pl PigLatin) Encode(input string) (string, error) {
	var output, currentWord []string
	data := strings.Split(input, "")

	for _, ch := range data {
		// if it's a letter, append to currentWord
		if pl.isLetter(ch) {
			currentWord = append(currentWord, ch)
			continue
		}

		// if there isn't anything in current word and we're not on a letter, just append
		// the letter to output and continue on
		if len(currentWord) == 0 && !pl.isLetter(ch) {
			output = append(output, ch)
			continue
		}

		// encode the word
		currentWord = pl.doTranslation(currentWord)

		// append currentWord to output
		output = append(output, currentWord...)

		// add the current character ( ie, not a letter, like spaces or punctuation )
		output = append(output, ch)

		// and reset currentWord to an empty slice
		currentWord = []string{}
	}

	// encode and append whatever word was still in progress when the input ended
	currentWord = pl.doTranslation(currentWord)
	output = append(output, currentWord...)

	return strings.Join(output, ""), nil
}
```

If you want to take a look at the whole file, as well as the tests, you can go take a look at the repo where I’ve got this code.

Alrighty! I’ve got some code that handles encoding English into Pig Latin. Now what?

Well, from here we could do a few things.

One is I could move on to the command line tool, and start writing that. I could also do the web utility first, for a bit of fun. However, I want to do some refactoring. This code isn’t quite as good as it could be, in my opinion.

For example, my Encode method takes in a string as its only argument and then everything internally is handled with strings. But it feels a bit weird to use a data type that can hold more than one character at a time (ie, strings) when I’d like to use a data type that can only hold one character at a time.

That way I can change the helper methods from expecting a string to this new data type, and they’d make a bit more sense. Basically, being able to pass in “apple” to isUpper feels a bit weird – I want to have the code force the “one character at a time” restriction on my helper methods.

Also, I’m exploding a string and doing a bunch of slice management. That feels a bit heavy; there’s got to be a solution we can use to clean that up too, right?

Refactoring Our Way To… Something #

So let’s think about these two design goals we want to achieve. The first is to move away from the string data type to one that can only hold a single character at a time. The other is to see what we can do to move away from slices if possible, or at least hide them behind our own data type or something from the standard library.

For the first new goal, Go has the handy rune data type. I’m not going to go into the technical details, but if you want them, check out this blog post on the go.dev site that goes over the difference between strings, runes, and characters.

So how does this change our code? Well, for one it actually lets me remove a line of code straight away:

data := strings.Split(input, "")

See, if I just loop over our input like so:

for _, r := range input {}

The type of r is rune, not string – pretty handy!
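A quick sketch of what that looks like in practice. The `runesOf` helper is a name I made up for this example; the rest is standard library. Ranging over a string decodes it one UTF-8 character at a time, handing back the byte offset and the rune at that offset.

```go
package main

import (
	"fmt"
	"strings"
)

// runesOf collects the runes produced by ranging over s.
func runesOf(s string) []rune {
	var out []rune
	for _, r := range s { // r has type rune (an alias for int32)
		out = append(out, r)
	}
	return out
}

func main() {
	word := "héllo"

	// strings.Split with an empty separator yields one string per character.
	fmt.Println(len(strings.Split(word, "")), "one-character strings")

	// Ranging yields the byte offset and the rune found at that offset.
	for i, r := range word {
		fmt.Printf("byte %d: %c\n", i, r)
	}

	fmt.Println(len(word), "bytes,", len(runesOf(word)), "runes")
}
```

Note that the byte offsets skip a number after the é, because that character takes two bytes in UTF-8.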

Of course, this will require a bunch of changes to my code. So starting with tests, let’s do this!

Where to start? Well, I want to start by changing each of our helper methods so that they each take a rune instead of a string as their argument. I force myself to make this change by updating the tests so that they’re passing in a rune. For example, we can change TestPigLatin_IsLetter so that the test table is set up like so:

```go
	tests := []struct {
		input  rune
		expect bool
	}{
		{'a', true},
		{'B', true},
		{'', false},
		{' ', false},
		{'!', false},
		{'1', false},
	}
```

Of course, making that change causes a few… issues:

cipher/piglatin_test.go:52:4: illegal rune literal

I expected an error, but maybe not this one – that’s pointing to this line:

```go
	tests := []struct {
		input  rune
		expect bool
	}{
		{'a', true},
		{'B', true},
		{'', false}, // <- this is the illegal rune literal
		{' ', false},
		{'!', false},
		{'1', false},
	}
```

Turns out, there is no “empty rune” in Go. Good to know – hopefully that will make things a bit easier and let me remove some code. But first I’ve got to remove all the “empty string” tests. Once that’s done, I continue doing this refactoring until I’ve replaced as much usage of string with rune as I can.
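The reason there’s no empty rune is that a rune is just an integer type, so the closest thing to “empty” is its zero value, which is the code point 0 rather than “no character”. Here’s a small sketch of that; `firstRune` is a hypothetical helper for this example, showing one way to represent “there was nothing there” with a second return value instead.

```go
package main

import (
	"fmt"
	"unicode"
)

// firstRune returns the first rune of s, and false when s is empty.
func firstRune(s string) (rune, bool) {
	for _, r := range s {
		return r, true
	}
	return 0, false
}

func main() {
	var zero rune // the zero value is the number 0, not "no character"
	fmt.Println(zero == 0, unicode.IsLetter(zero))

	_, ok := firstRune("")
	fmt.Println("empty string has a first rune:", ok)
}
```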

Once that’s done, what’s changed? To be honest, not a ton. Some of our helper functions now just wrap functions from the unicode package:

```go
// isLetter reports whether in is a Unicode letter.
func (pl PigLatin) isLetter(in rune) bool {
	return unicode.IsLetter(in)
}

// isVowel reports whether in appears in the package's vowels list.
func (pl PigLatin) isVowel(in rune) bool {
	for _, v := range vowels {
		if in == v {
			return true
		}
	}

	return false
}

// isUpper reports whether in is an upper-case letter.
func (pl PigLatin) isUpper(in rune) bool {
	if !pl.isLetter(in) {
		return false
	}

	return unicode.IsUpper(in)
}

// isSpace reports whether in is a Unicode whitespace character.
func (pl PigLatin) isSpace(in rune) bool {
	return unicode.IsSpace(in)
}
```

That’s good, less code is always appreciated! Especially code from the standard library. Other than that, the only other real change is using a string builder to turn our output slice into a string at the end. It feels a bit icky having this at the end of my Encode function:

```go
	outWr := strings.Builder{}
	for _, r := range output {
		outWr.WriteRune(r)
	}

	return outWr.String(), nil
```

Before implementing that change though, I’m going to tackle removing slices first. Don’t get me wrong; slices are fine, but what would be real nice is using stuff from the standard library so that I’m not working at such a low level. Or to word that better: I want the code inside each function to be working with the same levels of abstraction. This means if I want to use something like a string builder, the rest of the function shouldn’t mix in low-level primitives like slices.

Of course, there’s exceptions to every rule, and this one isn’t any different. The point though, isn’t strict adherence to every single rule; the point is to create readable, understandable, and maintainable code. That means not forcing another developer4 to keep low-level concepts in their head at the same time as high-level concepts.

What kind of solution could we use so that we’re abstracting away these slices?

What about a token parser? What’s that, you ask? Simple! Well, simple-ish.

Parsing Parsing Parsing #

So what are tokens, actually? Well let’s start by getting a bit more specific. In this context, I’m specifically talking about “lexical tokens”. Basically, taking a sentence like this:

Hello there, world!

And turning it into a list like this:

| Token Type | Value |
|---|---|
| Word | Hello |
| Space | |
| Word | there |
| Symbol | , |
| Space | |
| Word | world |
| Symbol | ! |

As you can see, we’ve got three ’types’ of tokens: words, spaces, and symbols. So what we’re going to do seems pretty straightforward: take our input string, turn it into a series of tokens, and then tell each token to encode itself. Actually implementing that will be a bit more work. So, let’s dive in!
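For illustration only, here’s a rough sketch of how a token list like that could be produced by hand. None of this is the code I ended up with: `TokenType`, `Token`, `classify`, and `tokenize` are hypothetical names, and the grouping rules ( letters stick together, whitespace sticks together, every other character stands alone ) are my assumption about what “word”, “space”, and “symbol” mean here.

```go
package main

import (
	"fmt"
	"unicode"
)

// TokenType and Token are hypothetical names for this sketch.
type TokenType string

const (
	Word   TokenType = "Word"
	Space  TokenType = "Space"
	Symbol TokenType = "Symbol"
)

type Token struct {
	Type  TokenType
	Value string
}

// classify maps a single rune to the kind of token it belongs to.
func classify(r rune) TokenType {
	switch {
	case unicode.IsLetter(r):
		return Word
	case unicode.IsSpace(r):
		return Space
	default:
		return Symbol
	}
}

// tokenize groups consecutive letters into Word tokens and consecutive
// whitespace into Space tokens; every other rune becomes its own Symbol.
func tokenize(input string) []Token {
	var tokens []Token
	for _, r := range input {
		kind := classify(r)
		last := len(tokens) - 1
		if last >= 0 && tokens[last].Type == kind && kind != Symbol {
			tokens[last].Value += string(r)
			continue
		}
		tokens = append(tokens, Token{Type: kind, Value: string(r)})
	}
	return tokens
}

func main() {
	for _, tok := range tokenize("Hello there, world!") {
		fmt.Printf("%-6s %q\n", tok.Type, tok.Value)
	}
}
```

With tokens like these, an encoder would only need to transform the Word tokens and pass everything else through untouched.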

Note from future Sean: I didn’t take the idea of tokens as far as I imagined here – the code didn’t need to get that complicated to solve the problem. This is actually one of the benefits of doing TDD in my mind; by writing a test focused on the problem I didn’t get caught up in writing the wrong solution. Or at least not an over-engineered solution.

First, we start by taking a look around the standard library to see whether there’s anything we could use. Turns out, there is! It’s called text/scanner, and we can use it to turn a string into a series of simple tokens. The best part is that we can customize how it parses the input, meaning we can use it to split our input string up exactly as we want!

However, I’ve never used text/scanner before – so I’m going to have to play around with this package to see how it works. So let’s play around a bit! First, I copied one of the examples from the godoc page, and modified it a bit:

```go
package main

import (
	"fmt"
	"strings"
	"text/scanner"
)

func main() {
	str := "Hello, world! this is a test string 12345 ### $5 . what"
	var s scanner.Scanner
	s.Init(strings.NewReader(str))
	s.Filename = "example" // this is where the "example:" prefix in the output comes from
	for tok := s.Scan(); tok != scanner.EOF; tok = s.Scan() {
		fmt.Printf("%s: %s\n", s.Position, s.TokenText())
	}
}
```

Running that gives me this:

example:1:1: Hello

example:1:6: ,

example:1:8: world

example:1:13: !

example:1:15: this

example:1:20: is

example:1:23: a

example:1:25: test

example:1:30: string

example:1:37: 12345

example:1:43: #

example:1:44: #

example:1:45: #

example:1:47: $

example:1:48: 5

example:1:50: .

example:1:52: what

In other words, the default settings for text/scanner.Scanner almost turn Hello, world! this is a test string 12345 ### $5 . what into exactly what we need. At the moment, it’s dropping spaces. Lucky for us, fixing that is pretty straight-forward!

```go
func main() {
	str := "Hello, world! this is a test string 12345 ### $5 . what"
	var s scanner.Scanner
	s.Init(strings.NewReader(str))
	s.Filename = "example"
	s.Whitespace ^= 1<<'\t' | 1<<' '
	for tok := s.Scan(); tok != scanner.EOF; tok = s.Scan() {
		fmt.Printf("%s: '%s'\n", s.Position, s.TokenText())
	}
}
```

Adding that s.Whitespace line gives us this output:

example:1:1: 'Hello'

example:1:6: ','

example:1:7: ' '

example:1:8: 'world'

example:1:13: '!'

example:1:14: ' '

example:1:15: 'this'

example:1:19: ' '

example:1:20: 'is'

<cut for length>

Perfect!
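That s.Whitespace line is a bit cryptic, so here’s a small sketch of what it’s doing. The scanner’s Whitespace field is a 64-bit mask with one bit per character, and the default ( scanner.GoWhitespace ) has the bits for tab, newline, carriage return, and space set. XOR-ing in the tab and space bits flips them off, so the scanner stops skipping those characters and hands them back as tokens instead. The `spacesAsTokens` and `isWhitespace` helpers are hypothetical names for this example.

```go
package main

import (
	"fmt"
	"text/scanner"
)

// spacesAsTokens returns the default Go whitespace mask with the tab and
// space bits toggled, mirroring the s.Whitespace line above.
func spacesAsTokens() uint64 {
	mask := uint64(scanner.GoWhitespace) // tab, newline, carriage return, space
	mask ^= 1<<'\t' | 1<<' '             // both bits start set, so XOR clears them
	return mask
}

// isWhitespace reports whether ch is set in a scanner-style whitespace mask.
// Only characters below 64 can be represented in the 64-bit mask.
func isWhitespace(mask uint64, ch rune) bool {
	return ch >= 0 && ch < 64 && mask&(1<<uint(ch)) != 0
}

func main() {
	mask := spacesAsTokens()
	for _, ch := range []rune{'\t', '\n', '\r', ' '} {
		fmt.Printf("%q skipped as whitespace: %v\n", ch, isWhitespace(mask, ch))
	}
}
```

One thing to keep in mind: XOR toggles, so applying the same line twice would turn the bits back on. Go’s `&^=` ( AND NOT ) operator always clears the bits, which might be the safer choice if the mask could already have been modified.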

So next step is to use this to parse the input string into a bunch of tokens. But how do I do that? What tests do I change, or add?

Let’s take a step back for a moment. There are two5 ways I could go about this. The first is that I could replace the loop we’ve got right now with one that just uses a text/scanner.Scanner. I wouldn’t have to change any tests at all, as I’d basically just be refactoring. The goal would be to re-implement this feature with new code that still passes the old tests.

However, when trying to just implement the scanner version while changing as little as possible, I very quickly run into an issue. All my helper functions are built around rune, and not string tokens. Right now, the code is set up to go through the input, rune by rune, to build each token manually. These ’tokens’ are just slices of runes that I pass into an encoding function.

How would I have to change the code to make the scanner version work? Well, let’s start by looking at the documentation for text/scanner – maybe there’s something there I can use? And because I’m writing this in the past, of course there is: Peek()!

Changing the scan test code to look like this:

```go
func main() {
	str := "Hello, world! this is a test string 12345 ### $5 . what"
	var s scanner.Scanner
	s.Init(strings.NewReader(str))
	s.Filename = "example"
	s.Whitespace ^= 1<<'\t' | 1<<' '
	fmt.Printf("first peek: %c\n", s.Peek())
	for {
		n := s.Peek()
		tok := s.Scan()
		if tok == scanner.EOF {
			break
		}

		fmt.Printf("%s: '%s', peek: %c\n", s.Position, s.TokenText(), n)
	}
}
```

Produces this output:

example:1:1: 'Hello', peek: H (eof: false)

example:1:6: ',', peek: , (eof: false)

example:1:7: ' ', peek: (eof: false)

example:1:8: 'world', peek: w (eof: false)

example:1:13: '!', peek: ! (eof: false)

example:1:14: ' ', peek: (eof: false)

example:1:15: 'this', peek: t (eof: false)

<cut for length>

Neat! Even better, Peek() returns a rune! So I can peek before scanning a token to get the first rune from the next token, and use that with our helper functions perhaps?

Another note from future Sean: I misread the docs on how Peek() works compared to Scan(); somehow my brain completely missed that what I wanted from it was to get a first line that looks like this: example:1:1: 'Hello', peek: , (eof: false). Thankfully, the way I misinterpreted this ended up working 😅 – don’t worry though, I eventually figure this out and fix the code so it’s not doing this specific silly thing any more. However, as I figured this out a few days after writing this section I’m just going to leave this in, mostly because trying to re-write this section just isn’t going to happen.
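To make the difference concrete, here’s a tiny sketch of the ordering that note is talking about. `peekAfterFirstToken` is a hypothetical helper for this example. Calling Peek() before Scan() returns the first character of the token that’s about to be scanned; calling it after Scan() returns the character that follows the token.

```go
package main

import (
	"fmt"
	"strings"
	"text/scanner"
)

// peekAfterFirstToken scans one token from input and returns its text along
// with the character Peek reports immediately afterwards.
func peekAfterFirstToken(input string) (string, rune) {
	var s scanner.Scanner
	s.Init(strings.NewReader(input))
	s.Scan()
	return s.TokenText(), s.Peek()
}

func main() {
	tok, next := peekAfterFirstToken("Hello, world!")
	fmt.Printf("token %q, then Peek sees %q\n", tok, next)
}
```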

After making those changes, here’s what I’ve got now:

```go
func (pl PigLatin) Encode(input string) (string, error) {
	var output []string

	pl.s.Init(strings.NewReader(input))
	pl.s.Filename = "original"
	pl.s.Whitespace ^= 1<<'\t' | 1<<' '

	for {
		ch := pl.s.Peek()
		tok := pl.s.Scan()
		if tok == scanner.EOF {
			break
		}

		if pl.isLetter(ch) {
			currentWord := pl.encodeStr(pl.s.TokenText())
			output = append(output, currentWord)
			continue
		} else {
			output = append(output, pl.s.TokenText())
		}
	}

	return strings.Join(output, ""), nil
}

// encodeStr converts the token to runes, encodes them, and builds a string
// from the result.
func (pl PigLatin) encodeStr(in string) string {
	var d []rune
	for _, ch := range in {
		d = append(d, ch)
	}
	d = pl.encodeRunes(d)
	out := strings.Builder{}
	for _, ch := range d {
		out.WriteRune(ch)
	}
	return out.String()
}
```

Nice! Now there’s only one slice: the output slice that goes back to being a slice of strings. I wrote encodeStr to transform the string from the scanner into the slice of runes expected by encodeRunes ( which used to be doTranslation ), but I’m not really a fan. However, this works well enough for now that I can move onto making the command line app/tool/thing. I’ll clean this up later when I come back around to do other ciphers.
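As a possible direction for that cleanup ( a sketch, not what’s in the repo ): Go’s built-in conversions between string and []rune do the same work as the two loops in encodeStr. In the example below, the `encode` parameter stands in for pl.encodeRunes, and `reverse` is just a placeholder transformation so the example runs on its own.

```go
package main

import "fmt"

// encodeStr converts in to runes, hands them to encode, and converts the
// result back to a string. The encode parameter stands in for pl.encodeRunes.
func encodeStr(in string, encode func([]rune) []rune) string {
	return string(encode([]rune(in)))
}

// reverse is a placeholder transformation used only for this demo.
func reverse(rs []rune) []rune {
	for i, j := 0, len(rs)-1; i < j; i, j = i+1, j-1 {
		rs[i], rs[j] = rs[j], rs[i]
	}
	return rs
}

func main() {
	fmt.Println(encodeStr("héllo", reverse))
}
```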

If you want to see all the code as it is now, you can check out the ‘refactoring’ branch on GitHub.

Command Line Fun #

Okay, so I’ve got the library now, time to build out the command line tool.

I’m going to be using Cobra to build the command line tool. One of the things that Cobra gives me is really easy sub-commands. For those of you who aren’t that familiar with the command line, a sub-command is basically an argument to the command line tool that causes it to do different things.

For example, let’s pretend I wanted to build a simple command line tool that can upload an image or get a list of images you’ve uploaded; let’s call it img. I could use flags to do these things; img -u <filename> could upload a file, img -l would list your uploaded images. However, flags aren’t super easy to remember, and figuring out what they do often requires heading to the documentation. Instead, I can use sub-commands so that instead of img -u <filename> you could write img upload <filename>; instead of img -l you could write img list.

I’m going to take advantage of this so that each cipher I want can be its own sub-command. This command is going to be called cipherator, because that’s hip and edgy6. Next I have to decide which “thing” will be the next-level sub-commands: the cipher to use, or the operation to perform. In other words, do I want to have cipherator <cipher> <operation> or cipherator <operation> <cipher>?

Because I may end up with way more ciphers than operations, I think cipherator <cipher> <operation> makes more sense. That’s because this way I can have a “Pig Latin” command with two sub-commands ( encode and decode ), instead of an “encode” command with a sub-command for each cipher I want to support. Now to be clear, this is mostly a personal preference / organization thing. I’m not trying to say one of these is better than the other; just that I had to make a choice and I prefer cipherator <cipher> <operation>.

At the moment, I’ve only got one cipher: Pig Latin. As for operations, I’ve only got encode – ie, turning English into Pig Latin.

Now, how does the command get the input? I think the easiest thing is for it to have one flag: -f/--file <filename>. If this flag is present, it treats the argument to that flag as a text file to open and encode. If the flag isn’t present, it treats all other non-flag arguments as the strings to encode. In other words, if we have a file named “copy.txt” with the contents “hello world”, these two invocations of cipherator should produce the same output:

- cipherator piglatin encode -f copy.txt
- cipherator piglatin encode hello world

I think it’d be nice to also have a flag that lets us redirect the output to a file; let’s go with -o/--output <filename>. That way, we could do cipherator piglatin encode -f copy.txt -o pl.txt and have our copy.txt encoded and put into pl.txt.

However, working with files will come a bit later – I want to do some refactoring before adding the ability to read from a file or to output to a file. For now the command will just take input as additional arguments.
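To make the "file flag or arguments" idea a bit more concrete, here's a rough sketch using only the standard library. The names openInput and readInput are made up for this example and aren't part of cipherator; the only point is that both kinds of input can end up behind the same reader.

```go
package main

import (
	"fmt"
	"io"
	"os"
	"strings"
)

// openInput is a hypothetical helper: it opens the file named by the
// -f/--file flag when one was given, and otherwise joins the remaining
// arguments into a single space-separated string.
func openInput(file string, args []string) (io.ReadCloser, error) {
	if file != "" {
		f, err := os.Open(file)
		if err != nil {
			return nil, fmt.Errorf("unable to open input file: %w", err)
		}
		return f, nil
	}
	return io.NopCloser(strings.NewReader(strings.Join(args, " "))), nil
}

// readInput drains whatever openInput selected and returns it as a string.
func readInput(file string, args []string) (string, error) {
	in, err := openInput(file, args)
	if err != nil {
		return "", err
	}
	defer in.Close()

	data, err := io.ReadAll(in)
	return string(data), err
}

func main() {
	// No file given, so the arguments are the input.
	text, err := readInput("", []string{"hello", "world"})
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(text)
}
```

Whatever encodes the text afterwards doesn't need to know which of the two paths produced it.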

Enough Planning, Write A Command Line Tool Already #

Okay, okay.

So, full disclosure: this is going to be the first time I’ll be writing a Cobra-backed command line tool using TDD. I’ve written a handful of CLI apps using Cobra in the past, I’ve just never tested the CLI app or written one using TDD.

The first thing we need to do is set up our sub-commands. We need a ‘Pig Latin’ sub-command, and that needs an ’encode’ sub-command. However, I don’t want to have to write the code that deals with input & output over and over again. In fact, all the commands need the same two things:

- an io.Reader to read text from
- an io.Writer to write the output to

Interfaces in Go are pretty great, and these two provided by the io package

are among some of the best. Almost every Go library or application that needs to

deal with input and/or output probably uses ( or should be using ) these

interfaces.
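If you haven't used these interfaces much, here's a small sketch of why they're so handy. The shout function is a made-up stand-in for an encoder (it just upper-cases things); the interesting part is that the same function works with an in-memory buffer in a test and with os.Stdout in a real program.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"os"
	"strings"
)

// shout is a stand-in for an encoder: it reads everything from r,
// upper-cases it, and writes the result to w.
func shout(w io.Writer, r io.Reader) error {
	data, err := io.ReadAll(r)
	if err != nil {
		return fmt.Errorf("unable to read input: %w", err)
	}
	_, err = w.Write(bytes.ToUpper(data))
	return err
}

// shoutString runs shout against in-memory values, which is what makes
// code written against these interfaces easy to test.
func shoutString(in string) (string, error) {
	var out bytes.Buffer
	err := shout(&out, strings.NewReader(in))
	return out.String(), err
}

func main() {
	// The same function can write straight to the terminal.
	if err := shout(os.Stdout, strings.NewReader("hello world\n")); err != nil {
		fmt.Fprintln(os.Stderr, err)
	}
}
```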

So what we’re going to do is define the two flags ( -f/--file for input,

-o/--output for output ) on the root command, rather than on any of the

sub-commands. Does this mean you could type in cipherator -f <filename>?

Yes. Would it do anything? No. It might be a bit silly, but I’m going to run

with this for now.

First off, we’ve got to figure out some tests. To do that, we need to figure out

what we’re testing. For our first test, we could check that when you run the

base command – cipherator, in our case – that it prints out the help

text. Sounds good!

Here’s what we end up with:

    func TestCmd_Root(t *testing.T) {
    	output := bytes.NewBuffer(nil)
    	expect := helpText

    	rootCmd.SetOutput(output)
    	err := rootCmd.Execute()
    	assert.NoError(t, err)
    	assert.Contains(t, output.String(), expect)
    }

If you’re curious, I’m using assert.Contains instead of assert.Equal because the help text changes as soon as you add a sub-command. This way I’m just testing that the initial help text is being output as expected.

Then there’s the root command, so the test passes:

    package main

    import (
    	"github.com/spf13/cobra"
    )

    const helpText = `Cipherator is a CLI tool for encoding and decoding English text using
    a variety of "toy" ciphers such as Pig Latin.`

    // rootCmd represents the base command when called without any subcommands
    var rootCmd = &cobra.Command{
    	Use:   "cipherator",
    	Short: "Encode/decode text using various toy ciphers",
    	Long:  helpText,
    }

    func main() {
    	cobra.CheckErr(rootCmd.Execute())
    }

Nice! So that’s the first step done. Next up, our Pig Latin command. Initially, it looks pretty similar to our root command:

    package main

    import "github.com/spf13/cobra"

    const piglatinHelpText = `Cipherator is a CLI tool for encoding and decoding English text using
    a variety of "toy" ciphers such as Pig Latin.`

    var piglatinCmd = &cobra.Command{
    	Use:   "piglatin",
    	Short: "Encode/decode text using the Pig Latin cipher",
    	Long:  piglatinHelpText,
    }

    func init() {
    	rootCmd.AddCommand(piglatinCmd)
    }

The test is basically the same as the root command:

    func TestCmd_Piglatin(t *testing.T) {
    	output := bytes.NewBuffer(nil)
    	expect := piglatinHelpText

    	piglatinCmd.SetOutput(output)
    	err := piglatinCmd.Execute()

    	assert.NoError(t, err)
    	assert.Contains(t, output.String(), expect)
    }

But this test fails:

    === RUN TestCmd_Piglatin
    Cipherator is a CLI tool for encoding and decoding English text using
    a variety of "toy" ciphers such as Pig Latin.

    Usage:
      cipherator [command]

    Available Commands:
      completion Generate the autocompletion script for the specified shell
      help Help about any command

    Flags:
      -h, --help help for cipherator

    Additional help topics:
      cipherator piglatin Encode/decode text using the Pig Latin cipher

    Use "cipherator [command] --help" for more information about a command.

    piglatin_test.go:18:
    Error Trace: /home/sean/Code/Go/src/github.com/seanhagen/cipherator/cmd/piglatin_test.go:18
    Error: "" does not contain "Cipherator is a CLI tool for encoding and decoding English text using\na variety of \"toy\" ciphers such as Pig Latin."
    Test: TestCmd_Piglatin
    --- FAIL: TestCmd_Piglatin (0.00s)
    === RUN TestCmd_Root
    --- PASS: TestCmd_Root (0.00s)
    FAIL
    FAIL github.com/seanhagen/pig-latinizer/cmd 0.005s
    FAIL

Why is that?

Well, turns out Cobra needs some extra prodding to work the way you expect. I had to change a few things, but managed to get this working.

The first step was to use the root command, but set the arguments so it calls our sub-command.

    func TestCmd_Piglatin(t *testing.T) {
    	output := bytes.NewBuffer(nil)
    	expect := piglatinLongHelpText

    	rootCmd.SetArgs([]string{"piglatin"})
    	rootCmd.SetOutput(output)
    	err := rootCmd.Execute()

    	assert.NoError(t, err)
    	assert.Contains(t, output.String(), expect)
    }

The second step, which highlights an issue, was to update the test for the root command to do something similar:

    func TestCmd_Root(t *testing.T) {
    	output := bytes.NewBuffer(nil)
    	expect := rootHelpText

    	rootCmd.SetArgs([]string{""})
    	rootCmd.SetOutput(output)
    	err := rootCmd.Execute()

    	assert.NoError(t, err)
    	assert.Contains(t, output.String(), expect)
    }

Why did I have to go back and change the test for the root command?

Global variables.

Because the root command is defined outside of any function or struct like so:

    var rootCmd = &cobra.Command{

It means that the root command variable in both tests is the same thing. Now, this might not seem like a big deal, but as I’ve already run into one weird issue because of global variables I’m going to take a moment to refactor all this to not use them at all. I don’t want these tests to become flaky or hard to understand because I kept using the global variables Cobra sets up by default when you use cobra to generate the initial files for you.
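If the shared-state problem seems abstract, here's a tiny stand-alone sketch of it. The command type below is a made-up, heavily simplified stand-in for *cobra.Command; it only exists to show how one "test" setting arguments on a package-level value leaks into the next, and how a constructor function avoids that.

```go
package main

import "fmt"

// command is a hypothetical, much-simplified stand-in for *cobra.Command.
type command struct {
	name string
	args []string
}

// sharedCmd plays the role of a package-level rootCmd.
var sharedCmd = &command{name: "cipherator"}

// newCommand returns a fresh value on every call, so callers cannot
// affect each other.
func newCommand() *command {
	return &command{name: "cipherator"}
}

func main() {
	// "Test one" sets arguments on the shared command...
	sharedCmd.args = []string{"piglatin"}
	// ...and "test two" sees them, even though it never set any.
	fmt.Println(len(sharedCmd.args)) // 1

	a := newCommand()
	a.args = []string{"piglatin"}
	b := newCommand()
	fmt.Println(len(b.args)) // 0
}
```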

Here’s where we’re at now:

    // in piglatin_test.go
    func TestCmd_Piglatin(t *testing.T) {
    	output := bytes.NewBuffer(nil)
    	expect := piglatinLongHelpText

    	cmd := getPigLatinCommand()
    	cmd.SetOutput(output)
    	err := cmd.Execute()

    	assert.NoError(t, err)
    	assert.Contains(t, output.String(), expect)
    }

    // in root_test.go
    func TestCmd_Root(t *testing.T) {
    	output := bytes.NewBuffer(nil)
    	expect := rootHelpText

    	rootCmd := getRootCommand()
    	rootCmd.SetOutput(output)
    	err := rootCmd.Execute()

    	assert.NoError(t, err)
    	assert.Contains(t, output.String(), expect)
    }

    // in root.go
    func getRootCommand() *cobra.Command {
    	return &cobra.Command{
    		Use:   "cipherator",
    		Short: "Encode/decode text using various toy ciphers",
    		Long:  rootHelpText,
    	}
    }

    // in piglatin.go
    func getPigLatinCommand() *cobra.Command {
    	return &cobra.Command{
    		Use:   "piglatin",
    		Short: piglatinShortHelpText,
    		Long:  piglatinLongHelpText,
    	}
    }

    func setupPigLatinCommand(root, plc *cobra.Command) {
    	root.AddCommand(plc)
    	// set up any flags below here
    }

    // in main.go
    func main() {
    	root := getRootCommand()

    	piglatin := getPigLatinCommand()
    	setupPigLatinCommand(root, piglatin)

    	cobra.CheckErr(root.Execute)
    }

Huzzah! No more global variables, and tests can run in parallel without messing with each other. Very good.

Okay, so now it’s time to add the sub-command for encoding, right?

Well…

Detours & Refactoring #

Here’s the thing about letting your subconscious figure stuff out for you:

sometimes it takes a little while. Remember how I said earlier that I felt that

cipherator <cipher> <operation> made more sense? My subconscious figured out a

better way to set this command up, but it requires switching to cipherator <operation> <cipher> to make it work.

The reason is that before I was thinking that <cipher> and <operation> would

all be sub-commands. But if I switch it around, then I can have something like

this ( very much not real code, just an example ):

    var exampleEncodeCommand = &cobra.Command{
    	Use:   "encode <cipher>",
    	Short: "Encode some text using the named cipher.",
    	Long: `Use one of the built-in ciphers to encode some text.

    Use the 'list-ciphers' command to see the list of built-in ciphers`,
    	RunE: func(cmd *cobra.Command, args []string) error {
    		useCipher := args[0]
    		toEncode := args[1:]

    		enc, err := cipher.GetEncoder(useCipher)
    		if err != nil {
    			return fmt.Errorf("'%v' is not a known cipher", useCipher)
    		}

    		enc.Encode(strings.Join(args[1:], " "))
    		cmd.OutOrStdout().Write(enc.Bytes())

    		return nil
    	},
    }

The cipher lookup in the middle (the call to cipher.GetEncoder and its error check) is the reason for the change to cipherator <operation> <cipher>.

Why is this version better, though? Simple: because it doesn’t require that the command line tool know anything about what ciphers are available. This way, if the caller of the API asks for a cipher not defined, we can return an error. This might seem a bit silly; there’s only one cipher at the moment!

When I add ROT13 though, why should that involve updating the command line tool?

The only thing that should change is the cipher package. This should be the

goal – because then I won’t forget to update both packages when a new cipher is

added later.

Also: I’ve got some ideas on how to improve the encoder[7].

So, what does this mean? Well, I need to go back to our cipher package and

make some changes. The main one will be to add the “get a cipher” function,

which will take an argument that defines which encoder to return, and returns

either the encoder that was asked for – or an error.

Again, starting with a test in cipher_test.go:

    func TestCipher_GetEncoder(t *testing.T) {
    	var enc Encoder
    	var err error

    	enc, err = GetEncoder(EncoderTypePiglatin)

    	assert.NoError(t, err)
    	assert.IsType(t, &PigLatin{}, enc)
    }

And then some implementation:

    //go:generate go-enum -f=$GOFILE --marshal

    package cipher

    import "fmt"

    // EncoderType ...
    // ENUM(piglatin, rot13)
    type EncoderType int32

    // Encoder
    type Encoder interface {
    	Encode(string) (string, error)
    }

    // GetEncoder ...
    func GetEncoder(t EncoderType) (Encoder, error) {
    	switch t {
    	case EncoderTypePiglatin:
    		return NewPigLatin()
    	}

    	return nil, fmt.Errorf("%v is an unknown encoder type", t.String())
    }

Here I’m using the fantastic go-enum package to generate some enum values. If you want to see what was generated, go take a look on GitHub. Other than that, pretty straight-forward.

There is more I could do, but I need to stay focused on our current task: finishing the command line tool. There are other changes I could make to the cipher package, but those should wait until later[8].

Okay, so what’s next? Back to the command line package and let’s write some

tests. So the new way this will work is by calling the command with the

operation first and the cipher second, like this: cipherator encode piglatin <text>. So first up I need an encode command.

    func TestCmd_EncodeNoFlags(t *testing.T) {
    	encPig := cipher.EncoderTypePiglatin.String()

    	tests := []struct {
    		cipher string
    		input  []string
    		expect string
    		error  bool
    	}{
    		{encPig, []string{"hello world"}, "ellohay orldway", false},
    		{encPig, []string{"hello", " ", "world"}, "ellohay orldway", false},
    		{encPig, []string{"h", "e", "l", "l", "o", " ", "w", "o", "r", "l", "d"}, "ellohay orldway", false},
    		{"nope", []string{"hello world"}, "hello world", true},
    	}

    	for i, tt := range tests {
    		t.Run(
    			fmt.Sprintf("test %v cipher %s input %s expect %s error %v", i, tt.cipher, tt.input, tt.expect, tt.error),
    			func(t *testing.T) {
    				output := bytes.NewBuffer(nil)

    				cmd := getEncodeCommand()
    				args := append([]string{tt.cipher}, tt.input...)

    				cmd.SetArgs(args)
    				cmd.SetOutput(output)
    				err := cmd.Execute()

    				if tt.error {
    					assert.Error(t, err)
    				} else {
    					assert.NoError(t, err)
    					assert.Equal(t, tt.expect, output.String())
    				}
    			},
    		)
    	}
    }

After writing the test, I updated the command:

    func getEncodeCommand() *cobra.Command {
    	return &cobra.Command{
    		Use:   "encode <cipher>",
    		Short: encodeShortHelpText,
    		Long:  encodeLongHelpText,
    		RunE: func(cmd *cobra.Command, args []string) error {
    			useCipher := args[0]
    			toEncode := strings.Join(args[1:], "")

    			c, err := cipher.ParseEncoderType(useCipher)
    			if err != nil {
    				return fmt.Errorf("unable to parse cipher name: %w", err)
    			}

    			enc, err := cipher.GetEncoder(c)
    			if err != nil {
    				return fmt.Errorf("unable to fetch cipher: %w", err)
    			}

    			output, err := enc.Encode(toEncode)
    			if err != nil {
    				return fmt.Errorf("unable to encode using the '%v' cipher: %w", c.String(), err)
    			}

    			_, err = cmd.OutOrStdout().Write([]byte(output))

    			return err
    		},
    	}
    }

And it all works! Neato. Can I build the command and run it?

$ go build -o ciph ./cmd

$ ./ciph encode piglatin hello world

Error: 0x5431a0

Apparently not!

Turns out the issue was this bit in main.go:

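    func main() {
    	root := getRootCommand()

    	enc := getEncodeCommand()
    	setupEncodeCommand(root, enc)

    	cobra.CheckErr(root.Execute)
    }

The last line needed to be this instead:

    cobra.CheckErr(root.Execute())

Without the parentheses, root.Execute is a function value rather than a call, so CheckErr was handed a non-nil function, printed its address as the error, and exited without ever running the command. That done, I can run the command: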

$ go build -o ciph ./cmd

$ ./ciph encode piglatin hello world

elloworldhay

Hrmmm. That’s not quite correct, but the fix turns out to be pretty easy. See, the arguments on the command line aren’t passed in as a single string. In other words, when I run this:

    $ ./ciph encode piglatin hello world

what finally ends up inside the encode command is this: []string{"piglatin","hello","world"}.

Is there an easy fix for this?

Well, how about changing this:

    toEncode := strings.Join(args[1:], "")

To this:

    toEncode := strings.Join(args[1:], " ")

That works!
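Here's that difference in isolation, as a quick sketch. The joinArgs helper is made up for the example; it just mimics what the encode command does with everything after the cipher name.

```go
package main

import (
	"fmt"
	"strings"
)

// joinArgs mimics what the encode command does with everything after the
// cipher name: glue the remaining arguments together using sep.
func joinArgs(args []string, sep string) string {
	return strings.Join(args[1:], sep)
}

func main() {
	// What the shell hands over for: ./ciph encode piglatin hello world
	args := []string{"piglatin", "hello", "world"}

	fmt.Printf("%q\n", joinArgs(args, ""))  // "helloworld"
	fmt.Printf("%q\n", joinArgs(args, " ")) // "hello world"
}
```

Quoting the text on the command line, as in ./ciph encode piglatin "hello world", sidesteps this entirely because the shell then passes it along as a single argument.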

This means that the tests have to change though – specifically, by changing the tests to be just these three:

    {encPig, []string{"hello world"}, "ellohay orldway", false},
    {encPig, []string{"hello", " ", "world"}, "ellohay orldway", false},
    {"nope", []string{"hello world"}, "hello world", true},

Okay, cool! At this point how am I doing according to the todo list of tasks?

- ~~Write a Go package that can encode English text using the Pig Latin cipher~~
- ~~Write a command-line tool that uses the library to encode the provided text, whether the text is in a file or provided as arguments~~
- Write a web utility that can encode text sent to it
- Add ROT13 as a cipher
- Add the ability to decode text that has been encoded

Groovy! Up next, web app thing!

CLI to HTTP #

So now it’s time to put this thing online. Being a web developer who is “Not Great™” at front end stuff at the best of times, this is going to be just an API. I’ll leave building a fancy page with HTML and JavaScript up to you.

So I’ll just be building a little HTTP-based API that has a route to do the encoding. I want the route to be laid out in a similar fashion as the arguments to the CLI tool, so the route is going to look like this:

POST /encode/<cipher>

The text to get encoded will be in the HTTP POST body. In other words, we should be able to do the following:

$ curl -X POST "https://ciphernator.site/encode/piglatin" --data-raw "hello world"

ellohay orldway

Before diving into testing, I’m going to think about how to organize this

code. There are kind of two ways I could go about this. One would be to create

a ‘web’ or ‘api’ package, and put everything for the web service in there. The

other way would be to move what’s currently in cmd into cmd/cli, and then

create a cmd/web. In that new folder, I could create the server binary – ie,

this is where the main() function for the web server would live.

Because most of what I’m writing is going to be HTTP-related code, the second

option feels a bit better to me. This way, all of the packages with a main()

are grouped under the cmd folder, instead of being spread all over our

repository. The idea of having every main() live inside the same folder within

a repository is super pleasing to me; it’s a bit of organization that just feels

good, you know?

Okay, so I’m using cmd/web for the HTTP server stuff. Are there any other

packages I might need? I’m not sure, but that’s what writing tests is for!

Diving in, eventually I end up with this:

    func encodeHandler(w http.ResponseWriter, r *http.Request) {
    	vars := mux.Vars(r)
    	c, ok := vars["cipher"]
    	if !ok {
    		w.WriteHeader(http.StatusBadRequest)
    		return
    	}

    	et, err := cipher.ParseEncoderType(c)
    	if err != nil {
    		w.WriteHeader(http.StatusBadRequest)
    		return
    	}

    	enc, err := cipher.GetEncoder(et)
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	text, err := io.ReadAll(r.Body)
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	out, err := enc.Encode(string(text))
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	w.WriteHeader(http.StatusOK)
    	// Fprint, not Fprintf: the encoded text is data, not a format string.
    	_, _ = fmt.Fprint(w, out)
    }

It works, but it doesn’t quite feel… great. There’s a lot of stuff going on there, and while there’s only one level of indentation with the if statements, it’s still a bit longer than I’d like. Thankfully, because there are unit tests, I can start changing this without having to worry about breaking stuff. So long as the tests keep passing I can keep moving forward with changes!

A little bit more work, and here’s what I’ve got now:

    func encodeHandler(w http.ResponseWriter, r *http.Request) {
    	defer r.Body.Close()

    	enc, err := getRequestEncoder(r)
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	err = encodeRequest(w, r.Body, enc)
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	w.WriteHeader(http.StatusOK)
    }

    func getRequestEncoder(r *http.Request) (cipher.Encoder, error) {
    	vars := mux.Vars(r)
    	c, ok := vars["cipher"]
    	if !ok {
    		return nil, fmt.Errorf("'cipher' not a valid key in request vars")
    	}

    	et, err := cipher.ParseEncoderType(c)
    	if err != nil {
    		return nil, fmt.Errorf("unable to parse encoder type: %w", err)
    	}

    	return cipher.GetEncoder(et)
    }

    func encodeRequest(w io.Writer, r io.Reader, enc cipher.Encoder) error {
    	text, err := io.ReadAll(r)
    	if err != nil {
    		return fmt.Errorf("unable to read request body: %w", err)
    	}

    	out, err := enc.Encode(string(text))
    	if err != nil {
    		return fmt.Errorf("unable to encode input: %w", err)
    	}

    	// Fprint, not Fprintf: the encoded text is data, not a format string.
    	_, err = fmt.Fprint(w, out)
    	return err
    }

I’ve pulled the two actions being taken in the request handler into two separate functions. The first handles getting the cipher encoder, the second handles actually encoding the text we send in our request.
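One wrinkle worth flagging with handlers shaped like this: in net/http, the first write to the response sends the headers with an implicit 200, and any WriteHeader call after that is ignored. So if the encoded text goes straight to the ResponseWriter, the status code can't be changed afterwards. Here's a sketch of one way around that, which is to encode into a buffer first and only pick the status once the result is known. The names encodeFunc, bufferedHandler, echo, and alwaysFails are all made up for this example, and the "ciphers" are stand-ins.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// encodeFunc is a hypothetical stand-in for a cipher: read from r, write to w.
type encodeFunc func(w io.Writer, r io.Reader) error

// echo is a stand-in cipher that copies its input unchanged.
func echo(w io.Writer, r io.Reader) error {
	_, err := io.Copy(w, r)
	return err
}

// alwaysFails is a stand-in cipher that never succeeds.
func alwaysFails(w io.Writer, r io.Reader) error {
	return errors.New("boom")
}

// bufferedHandler encodes into a buffer first, so the status code is only
// chosen once we know whether encoding worked.
func bufferedHandler(encode encodeFunc) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		var buf bytes.Buffer
		if err := encode(&buf, r.Body); err != nil {
			http.Error(w, "unable to encode request", http.StatusInternalServerError)
			return
		}
		w.WriteHeader(http.StatusOK)
		_, _ = w.Write(buf.Bytes())
	}
}

// statusFor runs a handler against an in-memory request and reports the
// status code it responded with.
func statusFor(h http.Handler, body string) int {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodPost, "/encode/piglatin", strings.NewReader(body))
	h.ServeHTTP(rec, req)
	return rec.Code
}

func main() {
	fmt.Println(statusFor(bufferedHandler(echo), "hello world"))        // 200
	fmt.Println(statusFor(bufferedHandler(alwaysFails), "hello world")) // 500
}
```

The trade-off is that the whole response sits in memory before anything is sent, which is fine for small inputs and less fine for big ones.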

Making Some Improvements #

Let’s take a closer look at encodeRequest though, I think there’s still some

work I can do to improve it.

The biggest code smell to me is that I’m using io.ReadAll. At the very least I should be wrapping the request body in an io.LimitedReader so that we can try to protect ourselves against someone trying to crash our site by sending a never-ending stream of data. Also, I don’t like that I need to read all the data first, then convert it to a string in order to pass it into Encode. What would be really great is if the encoder worked a little bit more like the json Encoder type.
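For the size limit, a minimal sketch looks something like the following. The readAtMost and readStringAtMost helpers and the errTooLarge error are names I've made up for illustration; the real work is done by io.LimitReader, which returns an io.LimitedReader under the hood.

```go
package main

import (
	"errors"
	"fmt"
	"io"
	"strings"
)

// errTooLarge is a hypothetical sentinel error for oversized input.
var errTooLarge = errors.New("input exceeds size limit")

// readAtMost reads r fully but refuses to buffer more than limit bytes.
// It asks for one byte more than the limit so that "exactly limit" and
// "more than limit" can be told apart.
func readAtMost(r io.Reader, limit int64) (string, error) {
	data, err := io.ReadAll(io.LimitReader(r, limit+1))
	if err != nil {
		return "", err
	}
	if int64(len(data)) > limit {
		return "", errTooLarge
	}
	return string(data), nil
}

// readStringAtMost is a small wrapper that makes the sketch easy to try.
func readStringAtMost(in string, limit int64) (string, error) {
	return readAtMost(strings.NewReader(in), limit)
}

func main() {
	text, err := readStringAtMost("hello world", 1024)
	fmt.Printf("%q %v\n", text, err) // "hello world" <nil>

	_, err = readStringAtMost("hello world", 5)
	fmt.Println(err) // input exceeds size limit
}
```

Inside an HTTP handler specifically, http.MaxBytesReader from the standard library does a similar job for the request body.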

Basically, what I’d like to end up with is something a bit more like this:

    func encodeRequest(w io.Writer, r io.Reader, enc cipher.Encoder) error {
    	if err := enc.WriteTo(w); err != nil {
    		return err
    	}

    	return enc.ReadFrom(r)
    }

Or even better, refactoring some of the other code as well, I could end up with something more like this:


Or at least something like that. None of that is what I’m eventually going to end up writing; think of it as pseudo-code that just happens to look a lot like Go.

In any case, this will require changing some stuff in the library package. I’ve

got to add a new constructor that will accept an io.Writer, and I’ve got to

add a method that accepts an io.Reader. On top of that, there’s whatever other

changes I’ll need to make so that it can read from that io.Reader and write

the encoded text to that io.Writer.

After playing around for a while, I know how I want to proceed with these changes. Unfortunately it’s going to mean re-writing some stuff. If this was a library already being used by other folks I’d look for a different way to do this. However, I’m going to pretend we’re still in the “pre-release” phase of this project, and we haven’t shipped Version 1.0.0 quite yet.

So after doing some tests, reading some docs, and thinking about this for a bit, I think I know how I’m going to proceed.

Keeping The Old Stuff, But Improving It #

First up, for small bits of text it’d be nice to be able to do either of the following:

    encoded, err := piglatin.Encode(input)
    // OR
    encoded, err := cipher.Encode(cipher.EncoderTypePiglatin, input)

If you know you’re only going to be dealing with smaller bits of text, and you only want Pig Latin, you can use the first. If you’re going to be dealing with various ciphers, but still small amounts of text, you can use the second.

Handling io.Writer Instead Of Strings #

Next up, handling io.Writer as an argument. There are two versions of this I’m

going to implement. As a side note, from now on I’ll only be showing the

piglatin package – there will be a similar function in the cipher package

that has an additional argument that specifies which cipher to use.

    // version 1, write to a provided io.Writer
    buf := bytes.NewBuffer(nil)
    err := piglatin.EncodeTo(input, buf)

    // version 2, create an encoder and then use it later
    enc, err := piglatin.New(buf)
    err = enc.Encode(input)

The reason I want to have these three different ways to interact with the encoder is pretty simple. The first is that keeping Encode(string) won’t break previous tests. The second is that while creating and holding on to an encoder makes sense in a web application, it makes a bit less sense in a command line tool that is only “alive” for a (hopefully) short period of time. Anyways!

What I Ended Up With #

After about half an hour of work, I think I’m happy with where the code is at now. Let’s take a look!

First up there’s the changes to the Pig Latin encoder:

    func New(wr io.Writer) (*Encoder, error) {
    	return &Encoder{wr}, nil
    }

    func Encode(in string) (string, error) {
    	out := bytes.NewBuffer(nil)
    	err := EncodeTo(in, out)
    	return out.String(), err
    }

    func EncodeTo(in string, wr io.Writer) error {
    	pl, err := New(wr)
    	if err != nil {
    		return err
    	}
    	return pl.EncodeFromString(in)
    }

    func (spl *Encoder) EncodeFromString(in string) error {
    	read := strings.NewReader(in)
    	return spl.readInto(read, spl.output)
    }

Nice! And best of all, this required barely any changes to our other packages – just the change from accepting a string to accepting an io.Reader in Encode. Take a look at our handler now:

    func encodeHandler(w http.ResponseWriter, r *http.Request) {
    	enc, err := getRequestEncoder(w, r)
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	err = enc.Encode(r.Body)
    	if err != nil {
    		w.WriteHeader(http.StatusInternalServerError)
    		return
    	}

    	w.WriteHeader(http.StatusOK)
    }

Very nice! Taking another look at our list:

- ~~Write a Go package that can encode English text using the Pig Latin cipher~~
- ~~Write a command-line tool that uses the library to encode the provided text, whether the text is in a file or provided as arguments~~
- ~~Write a web utility that can encode text sent to it~~
- Add ROT13 as a cipher
- Add the ability to decode text that has been encoded

We’ve built that web utility, and now it’s time to add another cipher! Let’s see what we need to do to add ROT13!

Adding ROT13 #

Turns out, I don’t need to do much!

First up, our ROT13 function – which I shamelessly borrowed from a StackOverflow answer and then modified a bit:

    func rot13(r rune) rune {
    	capital := r >= 'A' && r <= 'Z'
    	if !capital && (r < 'a' || r > 'z') {
    		return r // Not a letter
    	}

    	r += 13
    	if capital && r > 'Z' || !capital && r > 'z' {
    		r -= 26
    	}
    	return r
    }

After that I, uh, went a bit overboard. I’m not going to paste it in here because this post is already somewhat long; instead you can go look at the code on GitHub. I will walk you through it a bit here though.

Basically, I found that in the io package there are two other handy “XReader” interfaces, where “X” can be rune or byte. Because I’m working with runes, my preference would be that I just use an io.RuneReader. However, not all io.Readers can be io.RuneReaders. So using some handy methods from the utf8 package I wrote some other encoders that work with bytes. Honestly I could have just gone with the one that uses the io.Reader but it was fun coding up the three different ways that these interfaces from the io package can all accomplish the goal of “encode each rune using ROT13”.
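To give a flavour of the "not every io.Reader is an io.RuneReader" problem without pasting the real code, here's a small sketch of one way to deal with it. This is not what's in the repository: asRuneReader, encode, and encodeString are hypothetical names, and the rot13 function is a compact variant written for this example. The idea is to use the reader directly when it can already read runes, and wrap it in a bufio.Reader (which can) when it can't.

```go
package main

import (
	"bufio"
	"bytes"
	"fmt"
	"io"
	"strings"
)

// rot13 rotates ASCII letters by 13 places and leaves everything else alone.
func rot13(r rune) rune {
	switch {
	case r >= 'a' && r <= 'z':
		return 'a' + (r-'a'+13)%26
	case r >= 'A' && r <= 'Z':
		return 'A' + (r-'A'+13)%26
	}
	return r
}

// asRuneReader uses r directly when it already knows how to read runes,
// and otherwise wraps it in a bufio.Reader, which does.
func asRuneReader(r io.Reader) io.RuneReader {
	if rr, ok := r.(io.RuneReader); ok {
		return rr
	}
	return bufio.NewReader(r)
}

// encode applies rot13 to every rune read from r and writes the result to w.
func encode(w io.Writer, r io.Reader) error {
	in := asRuneReader(r)
	out := bufio.NewWriter(w)
	for {
		c, _, err := in.ReadRune()
		if err == io.EOF {
			break
		}
		if err != nil {
			return err
		}
		if _, err := out.WriteRune(rot13(c)); err != nil {
			return err
		}
	}
	return out.Flush()
}

// encodeString hides the ReadRune method of strings.Reader behind a plain
// io.Reader so that the bufio fallback is the path being exercised.
func encodeString(in string) string {
	var buf bytes.Buffer
	plain := struct{ io.Reader }{strings.NewReader(in)}
	_ = encode(&buf, plain)
	return buf.String()
}

func main() {
	fmt.Println(encodeString("Hello, World!")) // Uryyb, Jbeyq!
}
```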

When Not To Improve #

One thing that you may notice is that most of the encode functions look pretty similar to the Pig Latin one. In fact, let’s do a little test: which encoder is the following code from:

    // New ...
    func New(wr io.Writer) (*Encoder, error) {
    	return &Encoder{wr}, nil
    }

    // Encode ...
    func Encode(in string) (string, error) {
    	out := bytes.NewBuffer(nil)
    	err := EncodeTo(in, out)
    	return out.String(), err
    }

    // EncodeTo ...
    func EncodeTo(in string, wr io.Writer) error {
    	pl := &Encoder{wr}
    	return pl.EncodeFromString(in)
    }

    // EncodeFromString ...
    func (spl *Encoder) EncodeFromString(in string) error {
    	read := strings.NewReader(in)
    	return spl.Encode(read)
    }

Is this a chance to refactor out some common code? It is! However, that’s not the real question to ask right now: should we refactor out this common code?

Well, the func Encode(in string) (string,error) and func EncodeTo(in string, wr io.Writer) error functions can’t be refactored away… can they? Could we do something like the following:

    func Encode = cipher.Encode

Unfortunately no, we can’t alias functions the way we can with types in Go. Those two functions have to stay. We could create a type in the cipher package that looks something like this:

    type BaseEncoder struct {
    	encodeFn func(io.Reader) error
    }

    func (be *BaseEncoder) EncodeFromString(in string) error {
    	read := strings.NewReader(in)
    	return be.encodeFn(read)
    }

    func (be *BaseEncoder) Encode(r io.Reader) error {
    	return be.encodeFn(r)
    }

And then use it in one of our encoders like so (I’m using the ROT13 encoder as an example):

    type Encoder struct {
    	*cipher.BaseEncoder
    	wr io.Writer
    }

    func New(wr io.Writer) (*Encoder, error) {
    	// handle setup
    }

Unfortunately, that’s still not going to work. Remember the New(t EncoderType, wr io.Writer) (Encoder, error) function in the cipher package? Well, for that to work we have to import each package that provides an encoder recognized by our encoder types:

    1  func New(t EncoderType, wr io.Writer) (Encoder, error) {
    2  	switch t {
    3  	case EncoderTypePiglatin:
    4  		return piglatin.New(wr)
    5  	case EncoderTypeRot13:
    6  		return rot13.New(wr)
    7  	}
    8
    9  	return nil, fmt.Errorf("%v is an unknown encoder type", t.String())
    10 }

See how I’m calling piglatin.New(wr) on line 4, and rot13.New(wr) on line 6? I’m only able to do that because I’m importing both those packages like so:

    import (
    	"fmt"
    	"io"
    	"strings"

    	"github.com/seanhagen/cipherator/cipher/piglatin"
    	"github.com/seanhagen/cipherator/cipher/rot13"
    )

So if you’re not familiar, Go doesn’t allow circular imports. Those are situations where package A imports package B, and package B imports package A. The import chain can be longer than that, too – it doesn’t matter how many packages sit on the chain between A and B; if they end up importing each other, even indirectly, the compiler tosses out an error.

In order to refactor out the common code, I need a package to put it in. The best place is in the cipher package – but we can’t import anything from the cipher package inside of the piglatin or rot13 packages because that would cause a circular import and cause the compiler to fail. I could make a “base” encoder package that lives inside the cipher package, but then we’d be committing a naming no-no.

For now I’m just going to leave this. Copying the 18 lines of code that make up the first few functions in the rot13 or piglatin packages isn’t a big deal for now. Instead, I’m going to move on to the next task.

Decoding What Has Been Encoded #

Back to the list:

- ~~Write a Go package that can encode English text using the Pig Latin cipher~~
- ~~Write a command-line tool that uses the library to encode the provided text, whether the text is in a file or provided as arguments~~
- ~~Write a web utility that can encode text sent to it~~
- ~~Add ROT13 as a cipher~~
- Add the ability to decode text that has been encoded

Looks like the last thing left to do is to decode text.

We’re going to start off with another interface:

    type Decoder interface {
    	DecodeString(string) error
    	Decode(io.Reader) error
    }

Pretty much the same as the Encoder interface. I’m also creating a composite interface:

    type Handler interface {
    	Encoder
    	Decoder
    }

I’m calling it Handler for now because I can’t think of a good name at the moment, and Cipher creates stutter when you realize it would be used as cipher.Cipher.

Next up, a choice: do I update New so that it returns Handler instead of

Encoder?

I don’t know why I’m asking you, not only can’t you answer – I’ve already made

up my mind! I am going to make that change, because it won’t break backwards

compatibility. Anything expecting a cipher.Encoder will still work with a

cipher.Handler!
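If interface embedding is new to you, here's a self-contained sketch of why that works. The interfaces below are trimmed-down versions of the ones in this post (one method each), and noop, newHandler, and useEncoder are names invented for the example.

```go
package main

import (
	"fmt"
	"io"
	"strings"
)

// Encoder and Decoder are simplified, one-method versions of the
// interfaces discussed in the post.
type Encoder interface {
	Encode(io.Reader) error
}

type Decoder interface {
	Decode(io.Reader) error
}

// Handler embeds both interfaces, so its method set is the union of the two.
type Handler interface {
	Encoder
	Decoder
}

// noop is a hypothetical cipher that simply discards its input.
type noop struct{}

func (noop) Encode(r io.Reader) error { _, err := io.Copy(io.Discard, r); return err }
func (noop) Decode(r io.Reader) error { _, err := io.Copy(io.Discard, r); return err }

// newHandler mirrors the shape of a constructor that now returns Handler.
func newHandler() Handler { return noop{} }

// useEncoder was written against the narrower interface and is unchanged.
func useEncoder(e Encoder, in string) error {
	return e.Encode(strings.NewReader(in))
}

func main() {
	h := newHandler()
	// A Handler value can be passed wherever an Encoder is expected.
	fmt.Println(useEncoder(h, "hello world")) // <nil>
}
```

One caveat: this covers passing and assigning values. Code that stores the constructor itself in a variable with an exact function type would still notice the changed return type.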

After that, time to update the rot13 package so that it can decode! To start off I’m going to create two helper functions similar to the encode helper functions: Decode(string) (string, error) and DecodeTo(string, io.Writer) error. After those, I’ll add a DecodeString(string) error and a Decode(io.Reader) error method to the rot13.Encoder type.

Decoding ROT13 #

I’m starting with ROT13 because it’s much easier to decode; just apply ROT13 again and you’re golden! That makes writing the decoder super simple:

    package rot13

    import (
    	"bytes"
    	"io"
    	"strings"
    )

    // Decode ...
    func Decode(in string) (string, error) {
    	buf := bytes.NewBuffer(nil)
    	err := DecodeTo(in, buf)
    	return buf.String(), err
    }

    // DecodeTo ...
    func DecodeTo(in string, wr io.Writer) error {
    	rt := Encoder{wr}
    	return rt.DecodeString(in)
    }

    // DecodeString ...
    func (e *Encoder) DecodeString(in string) error {
    	read := strings.NewReader(in)
    	return e.Decode(read)
    }

    // Decode ...
    func (e *Encoder) Decode(r io.Reader) error {
    	return e.Encode(r)
    }

That’s it, the entirety of the code required to decode ROT13.

But what about…

Decoding Pig Latin #

This is where things get a bit more fun. As a quick refresher, here’s the rules for encoding English into Pig Latin:

- If the word begins with a consonant, take the first letter of the word and move it to the end and then add “ay”

- If the word begins with a vowel, just add “way” to the end of the word

What does this produce for various inputs?

| Input | Output |
|---|---|
| hello | ellohay |
| eat | eatway |
| by | ybay |
| at | atway |
| world | orldway |
| apples | applesway |
| I | Iway |
| a | away |
| way | ayway |

Well, the first thing we find out is that the shortest possible “word” in Pig Latin is four characters. The only single-letter words in the English language we care about are “I” and “a”; both are vowels and would have the full “-way” suffix attached when they get encoded. But do we need to care about the length of the token we’re processing if it’s a word and not a symbol? Not really; the rules to encode have nothing to do with the length of the word, just if it starts with a vowel or not.

Now, I could add a rule that handles “I” and “a” by saying “if the encoded text is four letters long, remove the last three”. Or I could just figure out the “proper” rule for decoding words that start with a vowel; that will work regardless of whether the word is “I”, “eat”, or “electroencephalographers”.

So how do we decode words that start with a vowel, like turning “eatway” into “eat” – and how do we differentiate between a word that starts with a vowel and one that starts with a ‘w’?

Well, turns out this is where I run into the first big challenge of this project.

Pig Latin and Word Collisions #

To sum this problem up, let’s take a look at two words: eight, and weight.

Let’s start by running both through a Pig Latin translator to see what they turn into. First up is eight; it starts with a vowel so we just add “way” to the end and get eightway. Next is weight; it starts with a consonant, so we move that to the end and add “ay”, which gives us… eightway.

Turns out there are other English words that translate into the same encoded Pig Latin word, too. So what does this mean for our ability to decode Pig Latin?

Well… hrmm. Before I make a decision, I want to figure something out.

Basically, I’d like to figure out roughly how many words in the English language encode to the same Pig Latin word. I’ve already figured out a few; eight and weight become eightway, arm and warm both become armway.

First up, I need a big-ass list of as many English words as I can find. Instead of just downloading a single list and using that, I decided to combine a few sources. The first was a word list I downloaded from wordgamedictionary.com, the second was a list I downloaded from this web page, and the last I scraped from bestwordlist.com with a quick Go script.

Then I used some command line tools to combine the lists, filter out duplicates, and remove “bad” words. In this case I don’t care about swear words, but each list has words that aren’t really words. For example one “word” I found was hexaenoic␣acid, another was Heywood␣Jablome9. Another one I found was hey␣rube hey␣Rube, which seems to be how the data source handled alternate versions of a single word – have them on the same “line” with a space separating them.

So first up, split words on that ␣ character as well as spaces, putting the split parts on new lines. For example hey␣rube hey␣Rube would become two lines: hey␣rube and hey␣Rube. Then I looped over the list again and further split words on characters like ␣. Then I downcased everything and sorted the list and removed any duplicates.

Next, I wrote a little script that would read each word from our new “prime word list” and encode it. It would use that as a key to a map[string][]string, and put the original word in the slice. This way I end up with a map of each Pig Latin encoded word and all the words that encode to that Pig Latin word. Then I printed out each encoded word that had more than one English base word and removed the obviously silly words like “winwards”.
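The script described above could look roughly like this. It's a sketch rather than the actual script: `encodeWord` and `collisions` are names I've made up for illustration, the word list is a tiny inline sample instead of the real combined file, and it assumes plain ASCII words.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// encodeWord applies the two Pig Latin rules to one lowercase ASCII word.
func encodeWord(w string) string {
	if w == "" {
		return w
	}
	if strings.ContainsRune("aeiou", rune(w[0])) {
		return w + "way"
	}
	return w[1:] + w[:1] + "ay"
}

// collisions groups words by their encoded form and keeps only the encoded
// forms that were produced by more than one source word.
func collisions(words []string) map[string][]string {
	groups := map[string][]string{}
	for _, w := range words {
		key := encodeWord(strings.ToLower(w))
		groups[key] = append(groups[key], w)
	}
	for key, sources := range groups {
		if len(sources) < 2 {
			delete(groups, key) // deleting while ranging over a map is safe in Go
		}
	}
	return groups
}

func main() {
	// A tiny sample standing in for the combined, de-duplicated word list.
	list := "eight weight arm warm hello world"
	groups := collisions(strings.Fields(list))

	// Sort the keys so the output order is stable.
	keys := make([]string, 0, len(groups))
	for k := range groups {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	for _, k := range keys {
		fmt.Println(k, groups[k])
	}
}
```

Using the encoded word as the map key means the grouping falls out of a single pass over the list; filtering for slices longer than one is all that's left.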

What did I find out?

Well, for one, at least one of these word lists came from scraping the web. How else did I end up with “winwards” and “wambulances”? I also found “okas wokas” which sounds like a line from a rage comic.

Anyways, what I ended up with was a list of 94 Pig Latin words that could be produced by more than one English word when the suffix is -way. There were a few commonalities between these words. Here, check out some examples and see if you can spot how these words relate to each other:

| A | B | Pig Latin |
|---|---|---|
| artless | wartless | artlessway |
| and | wand | andway |
| easel | weasel | easelway |
| ebbed | webbed | ebbedway |
| in | win | inway |
| ok | wok | okway |
| orks | works | orksway |

In each case word B can be created by taking word A and slapping a ‘w’ on the front.

Which makes sense, right? For words that start with a vowel we throw “way” on the end as the suffix, and words that start with ‘w’ end up with ‘way’ on the end too, since the ‘w’ moves to the back before ‘ay’ is added. For every letter other than ‘w’ this is fine, because the word ends in <letter>-ay like ‘day’, ‘say’, ‘fay’, ‘pay’, etc.

Okay, And? #

This doesn’t help me figure out a way to see when we’ve run into one of these “ambiguous” Pig Latin words, though. Starting from a word like axesway there is still no way to tell if the original was axes or waxes.

Now, I could change how I encode Pig Latin. For example, I could change the code so that the suffix includes a dash – but it gets attached after the consonant has been moved. That way a word like ok becomes ok-way and wok becomes okw-ay. While this would make decoding super simple, I’m not a fan. Mostly because I kind of want the encoding to be hard to spot; seeing -ay and -way all over the encoded text would make it pretty easy to figure out how to decode it.
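For the curious, here's a rough sketch of what that dash variant would look like and why decoding it is unambiguous. `encodeDash` and `decodeDash` are hypothetical helpers, not part of the project, and they assume lowercase ASCII words that don't already contain a dash.

```go
package main

import (
	"fmt"
	"strings"
)

// encodeDash is the dash variant: vowel words get "-way" appended, consonant
// words move their first letter to the end and get "-ay" appended.
func encodeDash(w string) string {
	if w == "" {
		return w
	}
	if strings.ContainsRune("aeiou", rune(w[0])) {
		return w + "-way"
	}
	return w[1:] + w[:1] + "-ay"
}

// decodeDash reverses encodeDash. The second return value is false when the
// input doesn't carry either suffix.
func decodeDash(p string) (string, bool) {
	switch {
	case strings.HasSuffix(p, "-way"):
		// Vowel word: nothing was moved, so just drop the suffix.
		return strings.TrimSuffix(p, "-way"), true
	case strings.HasSuffix(p, "-ay"):
		// Consonant word: the letter before the dash goes back to the front.
		stem := strings.TrimSuffix(p, "-ay")
		if stem == "" {
			return "", false
		}
		last := len(stem) - 1
		return stem[last:] + stem[:last], true
	}
	return "", false
}

func main() {
	for _, w := range []string{"ok", "wok", "axes", "waxes"} {
		enc := encodeDash(w)
		dec, _ := decodeDash(enc)
		fmt.Println(w, enc, dec)
	}
}
```

The dash marks exactly where the suffix starts, so "ok-way" and "okw-ay" can never be confused with each other. That's also what makes the encoding so easy to spot.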

Are there other ways I could change how English gets encoded to Pig Latin? Well, looking at the Wikipedia page on Pig Latin it turns out our rules are one of a few variations on how to encode Pig Latin; maybe one of the other versions will work better?

For example, one alternative is to use a different suffix for words that start with a vowel. I’m using ‘way’ as the suffix, alternates are ‘yay’ or ‘hay’. Unfortunately, those will probably have about the same size collision space – or worse. I can’t tell until I produce some output though, so give me another hour and I’ll see how ‘yay’ and ‘hay’ perform compared to ‘way’.

After putting in some work, I’ve got the collisions I care about for each of these suffixes: ‘-hay’, ‘-way’, and ‘-yay’. They’re a bit long, so I threw them up in a gist if you want to see them. It turns out there are differences in how many collisions each suffix produces. ‘-way’ comes in first with 94 collisions, ‘-hay’ is next with 78, and ‘-yay’ is last with… 28!
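Why does the suffix matter so much? A vowel suffix of the form X + "ay" can only collide with words whose first letter is X, because those are the only words that end in X + "ay" after their first letter gets moved. So "-way" collides with w-words, "-hay" with h-words, and "-yay" with y-words. Here's a small sketch of that; `encodeWith` is a hypothetical helper, and the word pairs are my own illustrations rather than entries pulled from the gist.

```go
package main

import (
	"fmt"
	"strings"
)

// encodeWith applies the Pig Latin rules to one lowercase ASCII word, using
// vowelSuffix for words that start with a vowel.
func encodeWith(w, vowelSuffix string) string {
	if w == "" {
		return w
	}
	if strings.ContainsRune("aeiou", rune(w[0])) {
		return w + vowelSuffix
	}
	return w[1:] + w[:1] + "ay"
}

func main() {
	// Each pair collides only under the suffix that starts with the second
	// word's first letter.
	pairs := []struct{ suffix, a, b string }{
		{"way", "eight", "weight"},
		{"hay", "eat", "heat"},
		{"yay", "ear", "year"},
	}
	for _, p := range pairs {
		fmt.Println(p.suffix, encodeWith(p.a, p.suffix), encodeWith(p.b, p.suffix))
	}
}
```

Fewer English words start with 'y' than with 'w' or 'h', which fits with '-yay' producing the smallest list.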

I could probably even remove a few from the lists; I doubt many people are using ‘yoctograms’ or ‘yorkish’ that often. After removing a few more less likely words, I end up with these final totals: ‘-way’ has dropped to 59, ‘-hay’ dropped to 37, and ‘-yay’ dropped to 15!

Does this help me make a decision on what to do about decoding Pig Latin? Well, not really. I did a bit more playing around while I was doing this, and found out there are a few suffixes that produce NO collisions but they’re suffixes like -aay or -eay. When it comes to making a decision on how to proceed, there’s still two things I need to figure out: how accurate I want the decoding to be, and how much I want to stick to the original rules of the challenge.

Can I Get Accurate Decoding? #

A better question might be this: do I want perfect decoding or do I want best-effort decoding?

For an example of what “best-effort” could look like, take this sentence:

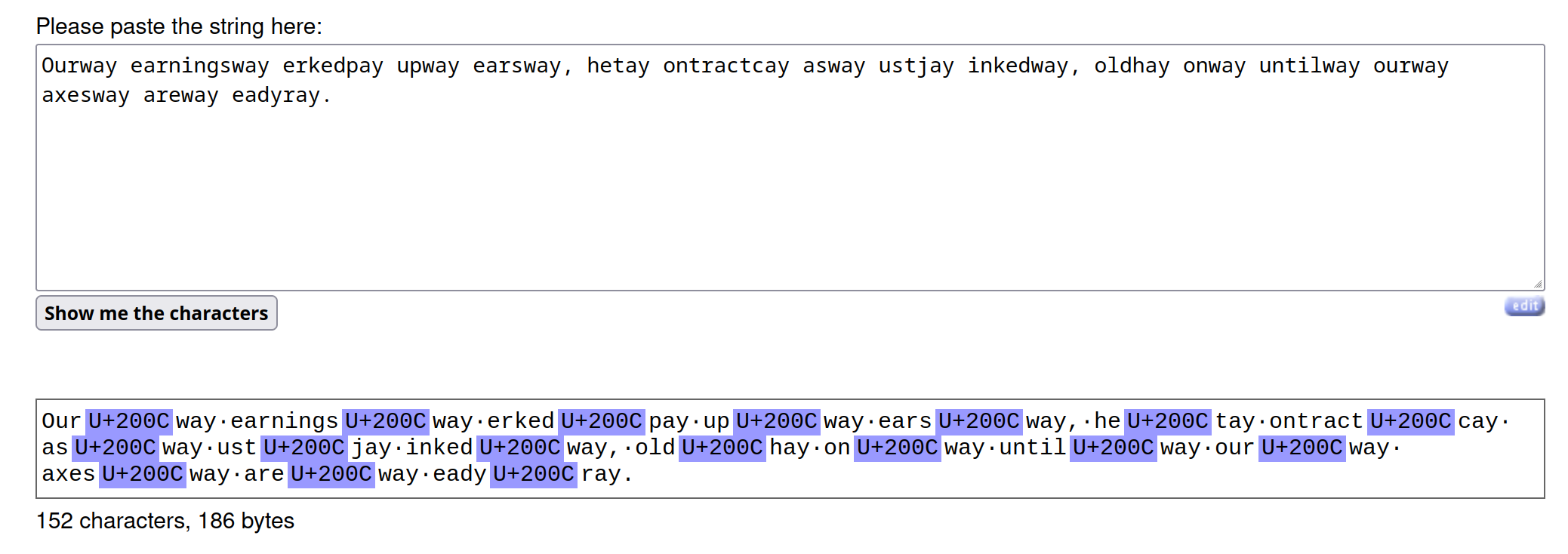

Our earnings perked up ears, the contract was just inked, hold on until our axes are ready.

In the table below you can see the words in the sentence that have ambiguous decodings for the suffixes -way, -hay, and -yay. I’m going to put an ‘x’ where the word doesn’t have an ambiguous way to decode.

| Original | -way | -hay | -yay |